Overview

From pbrt I quote:

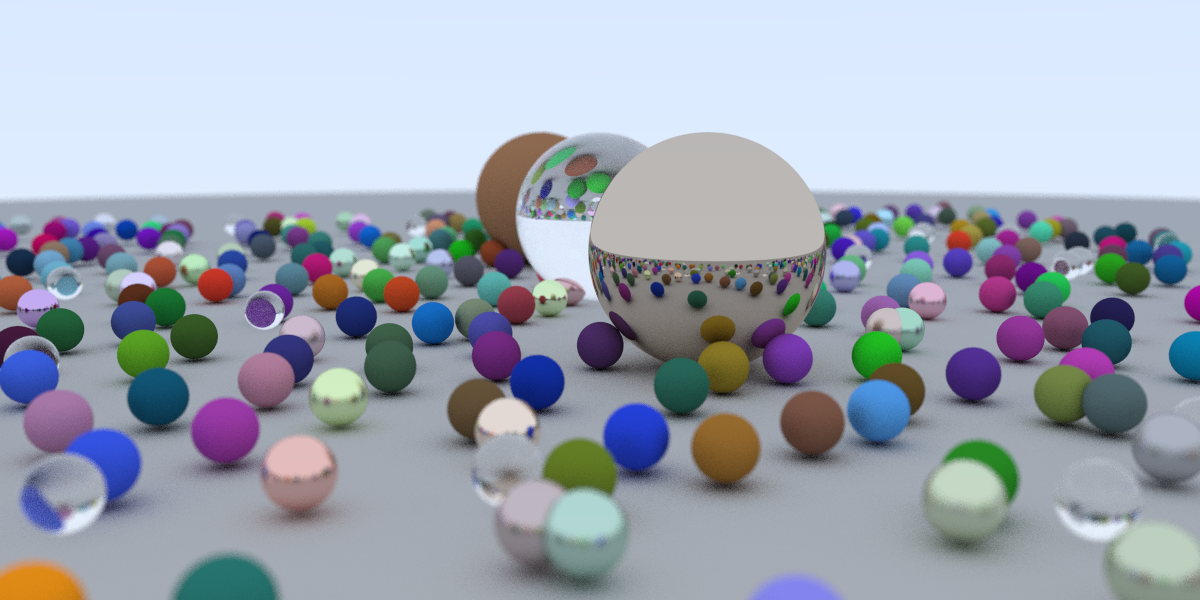

Although there are many ways to write a ray tracer, all such systems simulate at least the following objects and phenomena:

- Cameras: …

- Ray–object intersections: …

- Light sources: …

- Visibility: …

- Surface scattering: …

- Indirect light transport: …

- Ray propagation: …

As the diagram shows, from the camera we shoot a view ray through every pixel in image space and track how it interacts with the objects and lights in the scene. In the end we have a color value for every single pixel.

Personally, I learned rasterization before ray tracing. The two have opposite philosophies: one goes from the world to your eye, the other follows how your eye sees the world. Compared with all the approximation and interpolation methods we use in rasterization, ray tracing is a more intuitive and realistic, yet more resource-intensive, way to render images.

Ray

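Since it’s called ray tracing, we obviously need to represent a ray. A ray is described by the formula

$$\mathbf{P}(t) = \mathbf{O} + t\mathbf{D}$$

where $\mathbf{O}$ is the ray origin, $\mathbf{D}$ is its direction and $t$ is the parameter along the ray.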

Accordingly we have a Ray class
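A minimal sketch of such a Ray class might look like the following. The `Vec3` type and the member names (`origin`, `direction`, `at`) are assumptions made for illustration and may differ from the names used in the actual project.

```cpp
// Hypothetical minimal 3D vector; a stand-in for the renderer's own vector type.
struct Vec3 {
    double x, y, z;
    Vec3() : x(0.0), y(0.0), z(0.0) {}
    Vec3(double x_, double y_, double z_) : x(x_), y(y_), z(z_) {}
};

inline Vec3 operator+(const Vec3& a, const Vec3& b) { return Vec3(a.x + b.x, a.y + b.y, a.z + b.z); }
inline Vec3 operator*(double s, const Vec3& v) { return Vec3(s * v.x, s * v.y, s * v.z); }

// A ray P(t) = O + tD with origin O and direction D.
class Ray {
public:
    Ray() {}
    Ray(const Vec3& origin, const Vec3& direction) : origin_(origin), direction_(direction) {}

    const Vec3& origin() const { return origin_; }
    const Vec3& direction() const { return direction_; }

    // Point reached at parameter t along the ray.
    Vec3 at(double t) const { return origin_ + t * direction_; }

private:
    Vec3 origin_;
    Vec3 direction_;
};
```

Evaluating `at(t)` for increasing `t` walks along the ray away from its origin, which is all the intersection code needs later on.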


Camera

Unlike rasterization, most of our operations happen in world space, so we need to transform screen-space pixel coordinates into world space and shoot a view ray accordingly.

$u, v, w$ are the axes of camera space, which is also right-handed. vfov (the vertical field of view) and aspect_ratio then determine the view frustum. Then we have a Camera class:
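A minimal sketch of such a camera, assuming a look-from/look-at/view-up parameterization and a viewport placed one unit in front of the eye. The helper types (`Vec3`, `Ray`) and the names `look_from`, `look_at`, `view_up` and `get_ray` are assumptions for illustration, not necessarily the ones used in the project.

```cpp
#include <cmath>

// Hypothetical minimal vector type, just enough for the camera math.
struct Vec3 {
    double x, y, z;
    Vec3() : x(0.0), y(0.0), z(0.0) {}
    Vec3(double x_, double y_, double z_) : x(x_), y(y_), z(z_) {}
};

inline Vec3 operator+(const Vec3& a, const Vec3& b) { return Vec3(a.x + b.x, a.y + b.y, a.z + b.z); }
inline Vec3 operator-(const Vec3& a, const Vec3& b) { return Vec3(a.x - b.x, a.y - b.y, a.z - b.z); }
inline Vec3 operator*(double s, const Vec3& v) { return Vec3(s * v.x, s * v.y, s * v.z); }
inline Vec3 cross(const Vec3& a, const Vec3& b) {
    return Vec3(a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x);
}
inline Vec3 unit(const Vec3& v) {
    return (1.0 / std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z)) * v;
}

struct Ray {
    Vec3 origin;
    Vec3 direction;
};

class Camera {
public:
    // vfov is the vertical field of view in degrees; aspect_ratio is width / height.
    Camera(const Vec3& look_from, const Vec3& look_at, const Vec3& view_up,
           double vfov, double aspect_ratio) {
        const double pi = 3.14159265358979323846;
        const double theta = vfov * pi / 180.0;
        const double viewport_height = 2.0 * std::tan(theta / 2.0);
        const double viewport_width = aspect_ratio * viewport_height;

        // Right-handed camera basis: u points right, v up, w backwards (away from the scene).
        const Vec3 w = unit(look_from - look_at);
        const Vec3 u = unit(cross(view_up, w));
        const Vec3 v = cross(w, u);

        origin_ = look_from;
        horizontal_ = viewport_width * u;
        vertical_ = viewport_height * v;
        lower_left_ = origin_ - 0.5 * horizontal_ - 0.5 * vertical_ - w;
    }

    // s and t are normalized screen coordinates in [0, 1], with (0, 0) at the lower-left corner.
    // The returned direction is not normalized.
    Ray get_ray(double s, double t) const {
        return Ray{origin_, lower_left_ + s * horizontal_ + t * vertical_ - origin_};
    }

private:
    Vec3 origin_;
    Vec3 horizontal_;
    Vec3 vertical_;
    Vec3 lower_left_;
};
```

With a camera at the origin looking down the negative z axis, `get_ray(0.5, 0.5)` points straight at the viewport center, and the corners of the viewport are fixed by vfov and aspect_ratio.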


Ray-Object Intersections

Base class

We create a HitRecord struct to record the hit information we need, and an abstract base class with a virtual hit function for every object that can be hit.
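A minimal sketch of the two types follows. The fields stored in `HitRecord`, the `set_face_normal` helper and the `Hittable` name are assumptions for illustration; the real struct may carry more (for example a material pointer).

```cpp
// Hypothetical minimal vector and ray types.
struct Vec3 {
    double x, y, z;
    Vec3() : x(0.0), y(0.0), z(0.0) {}
    Vec3(double x_, double y_, double z_) : x(x_), y(y_), z(z_) {}
};

inline Vec3 operator-(const Vec3& v) { return Vec3(-v.x, -v.y, -v.z); }
inline double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

struct Ray {
    Vec3 origin;
    Vec3 direction;
};

// Everything we want to remember about a ray-object hit.
struct HitRecord {
    Vec3 point;              // where the ray hit the surface
    Vec3 normal;             // surface normal, stored so that it faces against the ray
    double t = 0.0;          // ray parameter at the hit
    bool front_face = true;  // true if the ray hit the outside of the surface

    // Flips the outward normal when the ray arrives from inside the object.
    void set_face_normal(const Ray& ray, const Vec3& outward_normal) {
        front_face = dot(ray.direction, outward_normal) < 0.0;
        normal = front_face ? outward_normal : -outward_normal;
    }
};

// Abstract base class for anything a ray can hit.
class Hittable {
public:
    virtual ~Hittable() {}
    // Returns true and fills `record` if the ray hits within [t_min, t_max].
    virtual bool hit(const Ray& ray, double t_min, double t_max, HitRecord& record) const = 0;
};
```

Restricting hits to `[t_min, t_max]` lets the caller ignore intersections behind the ray origin and, later, anything farther away than the closest hit found so far.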


Sphere

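For now we have only one geometric primitive, the sphere, which keeps the intersection math very simple. A sphere with center $\mathbf{C}$ and radius $r$ is described by the formula:

$$(\mathbf{P} - \mathbf{C}) \cdot (\mathbf{P} - \mathbf{C}) = r^2$$

And we put $\mathbf{P} = \mathbf{P}(t) = \mathbf{O} + t\mathbf{D}$ into the equation:

$$(\mathbf{D} \cdot \mathbf{D})\,t^2 + 2\,\mathbf{D} \cdot (\mathbf{O} - \mathbf{C})\,t + (\mathbf{O} - \mathbf{C}) \cdot (\mathbf{O} - \mathbf{C}) - r^2 = 0$$

This is a quadratic in $t$ with $a = \mathbf{D} \cdot \mathbf{D}$, $b = 2\,\mathbf{D} \cdot (\mathbf{O} - \mathbf{C})$ and $c = (\mathbf{O} - \mathbf{C}) \cdot (\mathbf{O} - \mathbf{C}) - r^2$. For $at^2 + bt + c = 0$ we have

$$t = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}$$

where $b^2 - 4ac$ is the discriminant of the equation, and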

- If $b^2 - 4ac < 0$, the line of the ray does not intersect the sphere (missed);

- If $b^2 - 4ac = 0$, the line of the ray just touches the sphere at one point (tangent);

- If $b^2 - 4ac > 0$, the line of the ray crosses the sphere at two points (intersected).

Then we have the Sphere class
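The discriminant test above can be sketched as follows. This is a minimal version under assumed helper types (`Vec3`, `Ray`, `HitRecord`, `Hittable`); it only accepts roots inside `[t_min, t_max]`, because the quadratic describes the whole line while a ray only covers the part in front of its origin.

```cpp
#include <cmath>

// Hypothetical minimal vector and ray types.
struct Vec3 {
    double x, y, z;
    Vec3() : x(0.0), y(0.0), z(0.0) {}
    Vec3(double x_, double y_, double z_) : x(x_), y(y_), z(z_) {}
};

inline Vec3 operator+(const Vec3& a, const Vec3& b) { return Vec3(a.x + b.x, a.y + b.y, a.z + b.z); }
inline Vec3 operator-(const Vec3& a, const Vec3& b) { return Vec3(a.x - b.x, a.y - b.y, a.z - b.z); }
inline Vec3 operator*(double s, const Vec3& v) { return Vec3(s * v.x, s * v.y, s * v.z); }
inline double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

struct Ray {
    Vec3 origin;
    Vec3 direction;
    Vec3 at(double t) const { return origin + t * direction; }
};

struct HitRecord {
    Vec3 point;
    Vec3 normal;
    double t = 0.0;
};

class Hittable {
public:
    virtual ~Hittable() {}
    virtual bool hit(const Ray& ray, double t_min, double t_max, HitRecord& record) const = 0;
};

class Sphere : public Hittable {
public:
    Sphere(const Vec3& center, double radius) : center_(center), radius_(radius) {}

    bool hit(const Ray& ray, double t_min, double t_max, HitRecord& record) const override {
        // Coefficients of a*t^2 + b*t + c = 0 from the substitution above.
        const Vec3 oc = ray.origin - center_;
        const double a = dot(ray.direction, ray.direction);
        const double b = 2.0 * dot(ray.direction, oc);
        const double c = dot(oc, oc) - radius_ * radius_;
        const double discriminant = b * b - 4.0 * a * c;
        if (discriminant < 0.0) return false;  // the line of the ray misses the sphere

        // Try the nearer root first, then the farther one.
        const double sqrt_d = std::sqrt(discriminant);
        double t = (-b - sqrt_d) / (2.0 * a);
        if (t < t_min || t > t_max) {
            t = (-b + sqrt_d) / (2.0 * a);
            if (t < t_min || t > t_max) return false;
        }

        record.t = t;
        record.point = ray.at(t);
        record.normal = (1.0 / radius_) * (record.point - center_);  // unit outward normal
        return true;
    }

private:
    Vec3 center_;
    double radius_;
};
```

For example, a ray from the origin along the negative z axis hits a unit sphere centered at (0, 0, -3) at t = 2, while the same sphere is missed when the ray points the other way, since both roots are then negative.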


List of hittable objects

For multiple objects in the scene we can keep a list of these objects and treat the list as if it were one giant object.
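One way to sketch this, assuming the list itself derives from the same hittable base class and reports the closest hit among its members. The names `HittableList` and `add` and the use of `std::shared_ptr` are assumptions for illustration.

```cpp
#include <memory>
#include <utility>
#include <vector>

// Hypothetical minimal supporting types.
struct Vec3 {
    double x, y, z;
    Vec3() : x(0.0), y(0.0), z(0.0) {}
    Vec3(double x_, double y_, double z_) : x(x_), y(y_), z(z_) {}
};

struct Ray {
    Vec3 origin;
    Vec3 direction;
};

struct HitRecord {
    Vec3 point;
    Vec3 normal;
    double t = 0.0;
};

class Hittable {
public:
    virtual ~Hittable() {}
    virtual bool hit(const Ray& ray, double t_min, double t_max, HitRecord& record) const = 0;
};

// A list of hittables that behaves like a single hittable.
class HittableList : public Hittable {
public:
    void add(std::shared_ptr<Hittable> object) { objects_.push_back(std::move(object)); }
    void clear() { objects_.clear(); }

    bool hit(const Ray& ray, double t_min, double t_max, HitRecord& record) const override {
        HitRecord candidate;
        bool hit_anything = false;
        double closest_so_far = t_max;

        for (const auto& object : objects_) {
            // Shrinking the upper bound rejects anything behind the closest hit so far.
            if (object->hit(ray, t_min, closest_so_far, candidate)) {
                hit_anything = true;
                closest_so_far = candidate.t;
                record = candidate;
            }
        }
        return hit_anything;
    }

private:
    std::vector<std::shared_ptr<Hittable>> objects_;
};
```

Because the list is itself a hittable, the rest of the renderer can trace against a whole scene with the same call it uses for a single sphere.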


Material

A material basically describes what an object does to a ray that hits it: how it reflects, refracts and so on. We design an interface, scatter, to describe this behavior.
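A minimal sketch of such an interface follows. The exact signature of `scatter` is an assumption; here it reports whether the ray was scattered and returns the attenuation and the scattered ray through output parameters. The `Metal` class is only an illustrative implementation, a perfect mirror without any fuzz, and is not necessarily how the project implements it.

```cpp
// Hypothetical minimal supporting types.
struct Vec3 {
    double x, y, z;
    Vec3() : x(0.0), y(0.0), z(0.0) {}
    Vec3(double x_, double y_, double z_) : x(x_), y(y_), z(z_) {}
};
typedef Vec3 Color;  // RGB stored in x, y, z

inline Vec3 operator-(const Vec3& a, const Vec3& b) { return Vec3(a.x - b.x, a.y - b.y, a.z - b.z); }
inline Vec3 operator*(double s, const Vec3& v) { return Vec3(s * v.x, s * v.y, s * v.z); }
inline double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

struct Ray {
    Vec3 origin;
    Vec3 direction;
};

struct HitRecord {
    Vec3 point;
    Vec3 normal;  // assumed to be unit length
    double t = 0.0;
};

class Material {
public:
    virtual ~Material() {}
    // Returns true if the incoming ray is scattered; on success `attenuation`
    // says how much each color channel is kept and `scattered` is the new ray.
    virtual bool scatter(const Ray& ray_in, const HitRecord& record,
                         Color& attenuation, Ray& scattered) const = 0;
};

// Mirror reflection of v about the unit normal n.
inline Vec3 reflect(const Vec3& v, const Vec3& n) { return v - (2.0 * dot(v, n)) * n; }

// Illustrative material: an ideal mirror tinted by `albedo`.
class Metal : public Material {
public:
    explicit Metal(const Color& albedo) : albedo_(albedo) {}

    bool scatter(const Ray& ray_in, const HitRecord& record,
                 Color& attenuation, Ray& scattered) const override {
        const Vec3 reflected = reflect(ray_in.direction, record.normal);
        scattered = Ray{record.point, reflected};
        attenuation = albedo_;
        // Rays reflected below the surface are treated as absorbed.
        return dot(reflected, record.normal) > 0.0;
    }

private:
    Color albedo_;
};
```

Keeping the interface this small means each material only has to answer one question: given an incoming ray and a hit, where does the light go next and how much of it survives.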


Lambertian

Metal

Dielectric

Antialiasing

Without antialiasing the image tends to look noisy and aliased. We simply take uniform random samples within each pixel's square, without considering the sample PDF (probability density function):
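A minimal sketch of this per-pixel sampling, assuming the samples are simply averaged. The function name `sample_pixel` and the `shade(u, v)` callback, which stands in for "shoot a view ray through normalized screen coordinates (u, v) and return its color", are hypothetical.

```cpp
#include <random>

struct Color {
    double r, g, b;
};

// Uniform random number in [0, 1).
inline double random_unit(std::mt19937& rng) {
    std::uniform_real_distribution<double> dist(0.0, 1.0);
    return dist(rng);
}

// Averages `samples` uniformly distributed samples inside pixel (i, j)
// of a width x height image.
template <typename Shade>
Color sample_pixel(int i, int j, int width, int height, int samples,
                   std::mt19937& rng, Shade shade) {
    Color sum = {0.0, 0.0, 0.0};
    for (int s = 0; s < samples; ++s) {
        // Jitter the sample position uniformly within the pixel's square.
        const double u = (i + random_unit(rng)) / width;
        const double v = (j + random_unit(rng)) / height;
        const Color c = shade(u, v);
        sum.r += c.r;
        sum.g += c.g;
        sum.b += c.b;
    }
    const double scale = 1.0 / samples;
    return Color{sum.r * scale, sum.g * scale, sum.b * scale};
}
```

Every sample carries the same weight here, which is what ignoring the PDF amounts to for a uniform distribution; more samples per pixel give smoother edges at a proportionally higher cost.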
